Data Mining and Recommender Systems

Today’s consumers are overwhelmed with choices. From the 500,000+ movies on Netflix to the 50 million+ products on Amazon, Twitter feeds, Instagram reels, TikTok videos, and more, we are bombarded with content!

Recommender systems help consumers by making product recommendations that are likely to be interest to the user such as movies, music, books (Wish they were more popular ☹️), news, search queries, social tags, videos, posts and products in general. Recommender systems may use a content-based approach, a collaborative approach, or a hybrid approach that combines both content-based and collaborative filtering.

The content-based approach recommends items that are similar to items in the user preferred or queried in the past. It relies on product features and textual item descriptions. The collaborative approach (or collaborative filtering approach) may consider a user’s social environment. It recommends items based on the opinions of other customers who have similar tastes or preferences as the user. Recommender systems use broad range of techniques from information retrieval, statistics, machine learning, and data mining to search for similarities among items and customer preferences.

Methods

Le’s take a look at some of the most popular methods for building recommender systems. We’ll start by generating synthetic user data.

Generating Synthetic User Data

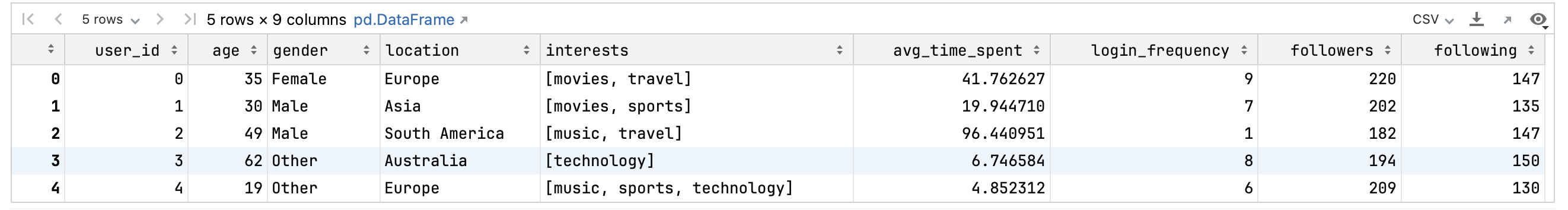

We’ll start by generating synthetic user data that mirrors the structure of real-world social media platforms.

For this, Let’s consider:

- Users:

- Demographics: Age, Gender, Location

- Interests: A list of categories they’re interested in

- Behavior: Average time spent on platform, frequency of logins

- Social: Number of followers, number of users they follow

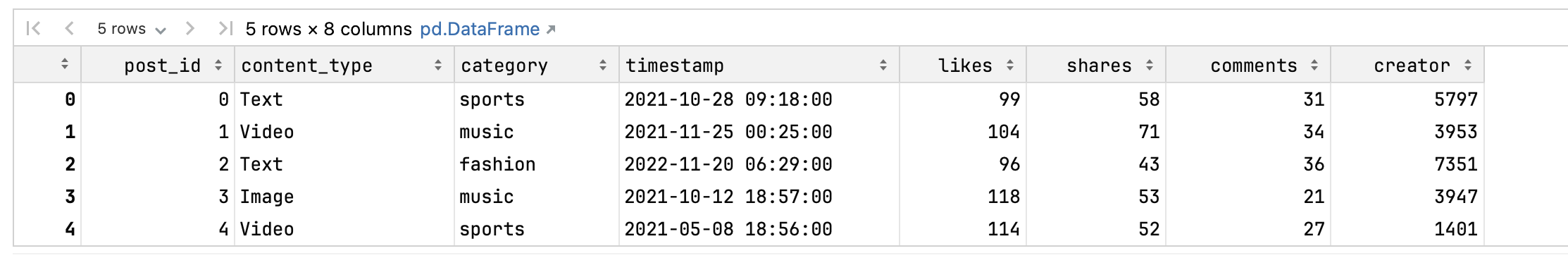

- Posts:

- Content Type: Text, Image, Video

- Category: Sports, Music, Technology, Fashion, Food, Travel, Movies

- Metadata: Timestamp, Number of Likes, Shares, Comments

- Creator: User ID of the creator

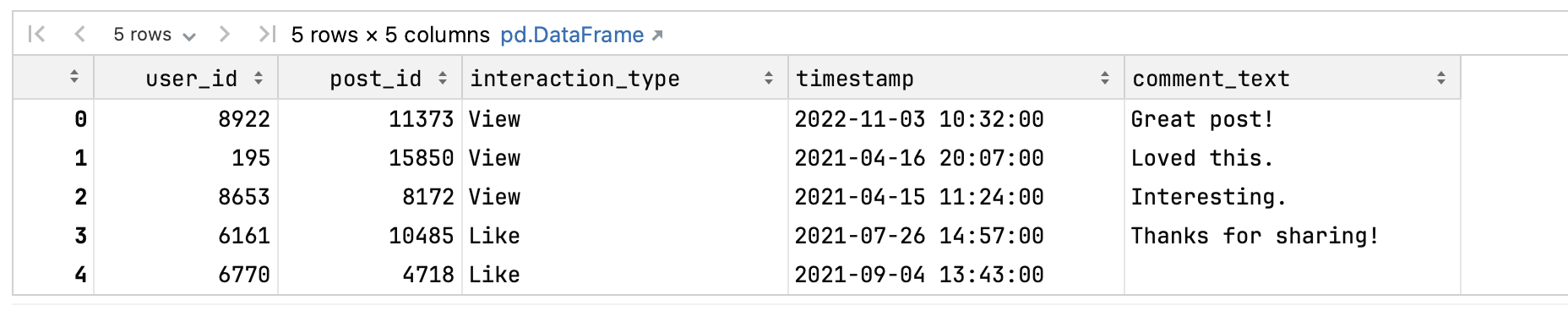

- Interactions:

- Type: Like, Share, Comment, View

- Timestamp

- Post ID and User ID

- For comments: Comment text

Let’s see this in action:

import numpy as np

import pandas as pd

# Constants for data generation

n_users = 10000

n_posts = 20000

n_interactions = 50000

# Generating user data

genders = ['Male', 'Female', 'Other']

locations = ['North America', 'Europe', 'Asia', 'Africa', 'South America', 'Australia']

interests_pool = ['sports', 'music', 'technology', 'fashion', 'food', 'travel', 'movies']

user_ids = range(n_users) # unique user ids

ages = np.random.randint(18, 70, n_users) # age between 18 and 70

genders_data = np.random.choice(genders, n_users) # gender

locations_data = np.random.choice(locations, n_users) # location

user_interests = [list(np.random.choice(interests_pool, np.random.randint(1, 4), replace=False)) for _ in

range(n_users)]

avg_time_spent = np.random.exponential(scale=30, size=n_users) # average time in minutes

login_frequency = np.random.poisson(lam=5, size=n_users) # average number of logins per week

followers = np.random.poisson(lam=200, size=n_users) # number of followers

following = np.random.poisson(lam=150, size=n_users) # number of users they follow

users = pd.DataFrame({

'user_id': user_ids, 'age': ages, 'gender': genders_data, 'location': locations_data,

'interests': user_interests, 'avg_time_spent': avg_time_spent, 'login_frequency': login_frequency,

'followers': followers, 'following': following

})

# Generating post data

content_types = ['Text', 'Image', 'Video']

categories = ['sports', 'music', 'technology', 'fashion', 'food', 'travel', 'movies']

post_ids = range(n_posts)

post_content_types = np.random.choice(content_types, n_posts)

post_categories = np.random.choice(categories, n_posts)

timestamps = pd.date_range(start="2021-01-01", end="2023-01-01", freq='T').to_numpy()

post_timestamps = np.random.choice(timestamps, n_posts)

likes = np.random.poisson(lam=100, size=n_posts)

shares = np.random.poisson(lam=50, size=n_posts)

comments = np.random.poisson(lam=30, size=n_posts)

creators = np.random.choice(user_ids, n_posts)

posts = pd.DataFrame({

'post_id': post_ids, 'content_type': post_content_types, 'category': post_categories,

'timestamp': post_timestamps, 'likes': likes, 'shares': shares, 'comments': comments,

'creator': creators

})

# Generating interactions data

interaction_types = ['Like', 'Share', 'Comment', 'View']

interaction_users = np.random.choice(user_ids, n_interactions)

interaction_posts = np.random.choice(post_ids, n_interactions)

interaction_types_data = np.random.choice(interaction_types, n_interactions, p=[0.5, 0.1, 0.1, 0.3])

interaction_timestamps = np.random.choice(timestamps, n_interactions)

comments_text = ["Great post!", "Loved this.", "Interesting.", "Thanks for sharing!", ""] * (n_interactions // 5)

interactions = pd.DataFrame({

'user_id': interaction_users, 'post_id': interaction_posts, 'interaction_type': interaction_types_data,

'timestamp': interaction_timestamps, 'comment_text': comments_text

})

users.head(), posts.head(), interactions.head()Users:

Posts:

Interactions:

Content-Based Filtering

This approach recommends items by comparing the content of the items and a user profile, with content described in terms of several descriptors or terms inherent to the item. To build a content-based recommender system based on our data, we’ll focus on the users’ interests and the posts' categories. For simplicity, we’ll compute a user’s affinity towards each category based on their interactions. We’ll then use this information to recommend posts from categories the user has shown a high affinity for.

What is Affinity Score?

Affinity scores represent a user’s interest or preference for a particular item, category, or topic based on their interactions and behaviors. In the context of recommendation systems, affinity scores help in determining how closely a user’s interests align with a particular content or product.

The idea is to assign a numerical value to a user’s interactions, reflecting the strength or depth of their engagement. For instance, reading an article might have a higher affinity score than merely clicking on it, and adding a product to a cart might have a higher affinity score than just viewing it.

Computing Affinity Scores

Given:

- $I$ - A set of all interactions (e.g., Like, Share, Comment, View).

- $w_i$ - The weight (or score) associated with interaction type $i$. This is a predefined value that reflects the depth of engagement for that interaction.

- $n_i$ - The number of times a user has performed interaction type $i$ on content belonging to a particular category.

The affinity score $A$ for a user toward a particular category is calculated as:

$$A = \sum_{i \in I} w_i \times n_i$$

In other words, for each type of interaction, you multiply the weight of that interaction by the number of times the user has performed it. Then, sum up these products for all interaction types to get the total affinity score.

For example, let’s say a user has:

- Liked ($w_{like} = 1$) 5 posts in the “music” category.

- Shared ($w_{share} = 2$) 2 posts in the “music” category.

- Commented ($w_{comment} = 2$) on 3 posts in the “music” category.

- Viewed ($w_{view} = 0.5$) 10 posts in the “music” category.

Using the formula, the affinity score $A$ for this user toward the “music” category would be:

$$A = (1 \times 5) +(2 \times 2) + $$ $$(2 \times 3) + (0.5 \times 10) = 5 + 4 + 6 + 5 = 20$$

So, the user’s affinity score for the “music” category is 20.

Now that we’ve covered the basics, let’s discuss the plan:

- Calculate the user’s affinity score for each category.

- Based on the user’s score, recommend posts that they might be interested in.

Enough talk, let’s code!

import pandas as pd

# First, we'll assign weights to each interaction type

interaction_weights = {

'Like': 1,

'Share': 2,

'Comment': 2,

'View': 0.5

}

# Map the weights to the interactions

interactions['weights'] = interactions['interaction_type'].map(interaction_weights)

# Merge the interactions with the post's category

user_category_weights = interactions.merge(posts[['post_id', 'category']], on='post_id')

# Compute the user's affinity score for each category

category_affinity = user_category_weights.groupby(['user_id', 'category']).agg(

affinity_score=pd.NamedAgg(column='weights', aggfunc='sum')).reset_index()

# 2. Recommend posts based on affinity score

def recommend_posts_for_user(user_id, num_posts=5):

# Fetch the categories the user has the highest affinity for

top_categories = category_affinity[category_affinity['user_id'] == user_id].sort_values(

by='affinity_score', ascending=False)['category'].tolist()

# Recommend posts from those categories which the user hasn't interacted with

posts_interacted = interactions[interactions['user_id'] == user_id]['post_id'].tolist()

recommended_posts = posts[~posts['post_id'].isin(posts_interacted) & posts['category'].isin(top_categories)]

# Shuffle the DataFrame for randomness

recommended_posts = recommended_posts.sample(frac=1).reset_index(drop=True)

# Return the top num_posts from the recommended list

return recommended_posts.head(num_posts)

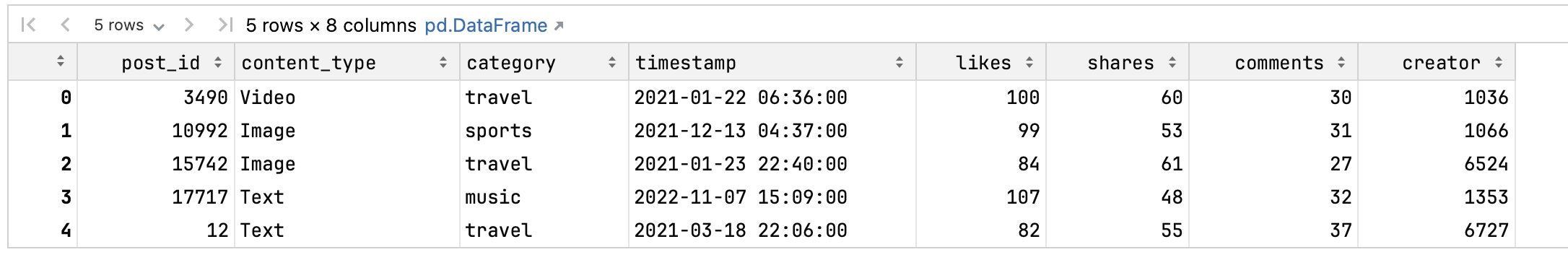

# Example: Recommend 5 posts for user with ID 101.

recommend_posts_for_user(101,num_posts=5)Output:

As we can see, the recommended posts are from categories that the user has shown a high affinity for. This is a simple example of a content-based recommender system. In practice, you can use more sophisticated techniques to generate recommendations. For instance, you can use the post’s title, description, and other metadata to compute the user’s affinity score.

Collaborative Filtering

Collaborative filtering is one of the most popular techniques used in recommendation systems. It relies on past interactions between users and items to generate recommendations. Here’s how it works:

How Collaborative Filtering Works:

The fundamental idea behind collaborative filtering is that users who have shown similar preferences in the past are likely to have similar preferences in the future. This premise drives the recommendation.

There are two main approaches to collaborative filtering:

-

User-based Collaborative Filtering:

- Concept: This method identifies users that are similar to the target user based on their past interactions.

- Example: If both user A and user B liked movies X, Y, and Z, and user A also liked movie W, then the system would recommend movie W to user B.

- Use-case: This is particularly useful in scenarios where the behavior of users is a strong indicator of preferences, like movie or music recommendations.

-

Item-based Collaborative Filtering:

- Concept: Instead of looking at user similarities, this method focuses on item similarities.

- Example: If a group of users has interacted similarly with items A and B, then those items are considered to be similar. So, if a user interacts with item A, the system can recommend item B.

- Use-case: This works well in situations where items have strong correlations, like buying patterns in e-commerce platforms.

Neural Collaborative Filtering:

Here’s a breakdown:

-

Data Preparation: The dataset contains users, their interactions with posts (like, share, comment, view), and the posts themselves. The interactions are first converted into numerical values to make them suitable for model training. For instance, a ‘Like’ is mapped to 1, a ‘Share’ to 2, and so on.

-

Embeddings: In deep learning, embeddings are a way to represent categorical variables in a continuous vector space. Here, both users and posts are embedded into vectors of a specified size (10 in this case). These embeddings capture the latent factors (hidden features) that influence the interactions between users and posts.

-

Model Architecture: The neural model takes the user and post embeddings, flattens them, and concatenates them. This concatenated vector then passes through dense layers, ultimately predicting an interaction value.

-

Training: The model is trained using mean squared error as the loss function, which measures the difference between the predicted and actual interaction values.

-

Evaluation: After training, the model’s predictions on a test set are evaluated using the Root Mean Squared Error (RMSE). RMSE provides a measure of the model’s accuracy in predicting the interaction values.

Enough talk, let’s code!

import numpy as np

import pandas as pd

from keras.models import Model

from keras.layers import Embedding, Input, Dense, Flatten, concatenate

from keras.optimizers import Adam

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder

# Assuming the data generation code provided earlier is executed and we have users, posts, interactions dataframes

# 1. Label Encoding for User and Post IDs

user_enc = LabelEncoder()

users['user_encoded'] = user_enc.fit_transform(users['user_id'])

post_enc = LabelEncoder()

posts['post_encoded'] = post_enc.fit_transform(posts['post_id'])

# 2. Prepare the data for Neural Collaborative Filtering

interactions['user_encoded'] = user_enc.transform(interactions['user_id'])

interactions['post_encoded'] = post_enc.transform(interactions['post_id'])

# Convert interaction types to numerical values for the matrix

interaction_type_mapping = {

'Like': 1,

'Share': 2,

'Comment': 3,

'View': 0.5

}

interactions['interaction_value'] = interactions['interaction_type'].map(interaction_type_mapping)

# 3. Create train and test datasets

train, test = train_test_split(interactions, test_size=0.2, random_state=42)

# 4. Build the Neural Collaborative Filtering model

num_users = len(user_enc.classes_)

num_posts = len(post_enc.classes_)

embedding_size = 10

# User and post inputs

user_input = Input(shape=(1,), name="user_input")

post_input = Input(shape=(1,), name="post_input")

# Embeddings layers

user_embedding = Embedding(input_dim=num_users, output_dim=embedding_size, name="user_embedding")(user_input)

post_embedding = Embedding(input_dim=num_posts, output_dim=embedding_size, name="post_embedding")(post_input)

# Flatten embeddings

user_vec = Flatten()(user_embedding)

post_vec = Flatten()(post_embedding)

# Merge the user and post vectors

merged = concatenate([user_vec, post_vec])

# Dense layers

dense_1 = Dense(128, activation='relu')(merged)

dense_2 = Dense(64, activation='relu')(dense_1)

output = Dense(1)(dense_2)

# Compile the model

model = Model(inputs=[user_input, post_input], outputs=output)

model.compile(optimizer=Adam(0.001), loss='mean_squared_error')

# 5. Train the model

batch_size = 64

epochs = 5

model.fit([train['user_encoded'], train['post_encoded']], train['interaction_value'], batch_size=batch_size, epochs=epochs, verbose=1, validation_split=0.1)

# 6. Predict on test set

predictions = model.predict([test['user_encoded'], test['post_encoded']])

rmse = np.sqrt(np.mean((np.array(test['interaction_value']) - predictions.flatten())**2))

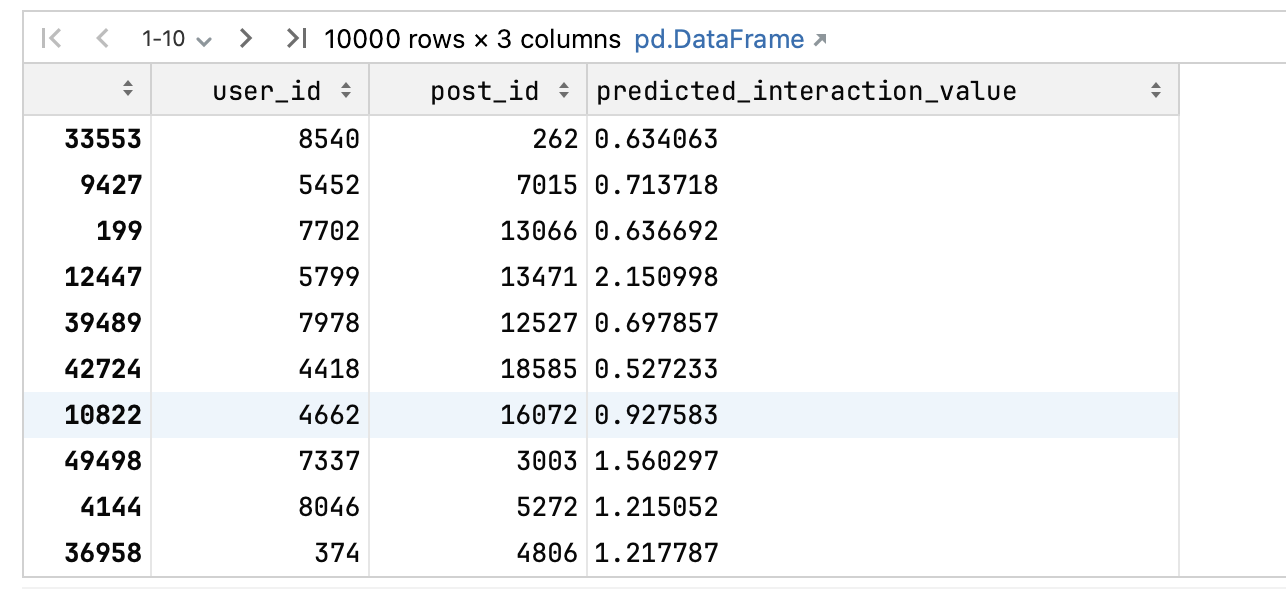

print(f"RMSE: {rmse}")Output:

As we can see, values higher than 1 typically suggest that the model believes the user is likely to have more than just a basic or minimal interaction with the post. In the context of social media, this could mean that the user is likely to like, share, or comment on the post. Values lower than 1 suggest that the user is likely to have a minimal interaction with the post, such as viewing it.

Hybrid Recommender Systems

Hybrid recommender systems combine the strengths of content-based and collaborative filtering to provide more accurate and diverse recommendations. The idea is to leverage the best aspects of each approach to overcome their weaknesses.

Let’s see how this works in practice:

# Function for Content-based Filtering score (based on your earlier code)

def get_content_based_score(user_id, post_id):

user_affinities = category_affinity[category_affinity['user_id'] == user_id]

post_category = posts[posts['post_id'] == post_id]['category'].values[0]

score = user_affinities[user_affinities['category'] == post_category]['affinity_score'].values

if score.size >0:

return score[0]

else:

return 0

# Combine scores for hybrid recommendations

def hybrid_score(user_id, post_id, alpha=0.5):

# Neural Collaborative Filtering score

user_enc_id = user_enc.transform([user_id])

post_enc_id = post_enc.transform([post_id])

ncf_score = model.predict([user_enc_id, post_enc_id])[0][0]

# Content-based score

cb_score = get_content_based_score(user_id, post_id)

# Combined score

return alpha * ncf_score + (1 - alpha) * cb_score

# Recommend posts for a user based on hybrid score

def hybrid_recommendations(user_id, num_posts=5):

# Exclude already interacted posts

interacted_posts = interactions[interactions['user_id'] == user_id]['post_id'].tolist()

remaining_posts = posts[~posts['post_id'].isin(interacted_posts)]

# Calculate hybrid scores for remaining posts

scores = [hybrid_score(user_id, post_id) for post_id in remaining_posts['post_id']]

# Get top num_posts based on scores

recommended_post_ids = [x for _, x in sorted(zip(scores, remaining_posts['post_id']), reverse=True)[:num_posts]]

return posts[posts['post_id'].isin(recommended_post_ids)]

# Example: Recommend 5 posts for user with ID 101 using hybrid approach.

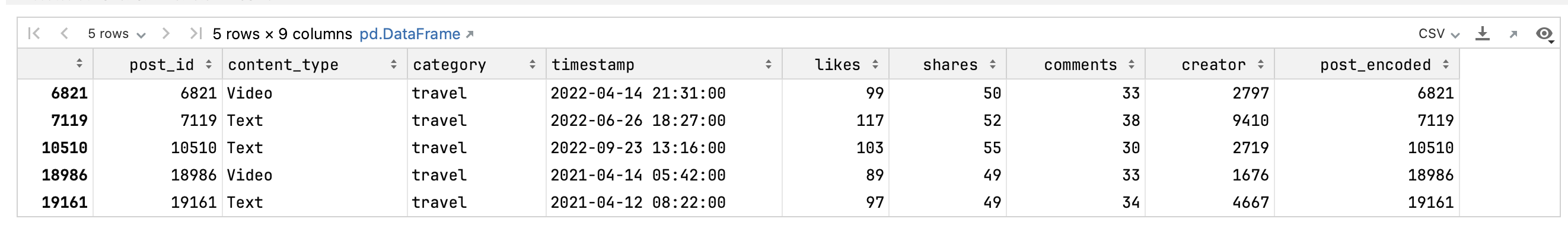

res = hybrid_recommendations(101, num_posts=5)Output:

Here we can see the 5 posts recommended by the hybrid approach for user 101. The hybrid approach combines the content-based and collaborative filtering scores to generate recommendations. The alpha parameter controls the weight given to each score. For instance, if alpha is 0.5, then the hybrid score is the average of the two scores. If alpha is 0.8, then the hybrid score is 80% collaborative filtering and 20% content-based filtering.

Conclusion

Recommender systems play a crucial role in enhancing user experience across various platforms by helping users navigate through vast amounts of content. By understanding and implementing data mining techniques, such as content-based and collaborative filtering, businesses can offer more personalized and relevant recommendations to their users. This results in increased user engagement, satisfaction, and retention.